Data and Artificial Intelligence (AI)

Once considered futuristic science fiction, data and AI are now a reality in so many of our daily lives – sometimes without us even knowing it.

That’s why, as the Civic Data Cooperative (CDC), we are helping residents to better understand what data and AI really are. We are also working with the NHS, local authorities and other public sector organisations, businesses and academic organisations to help them use data for good.

When used and shared responsibly, data and AI can improve our local services and solve big problems.

But of course, data and AI can feel hard to understand. You may also feel that your data should be private, or that it isn’t ‘big’ enough to play a part in making a difference.

So, let’s start with the basics.

What is data?

Data is information collected in different forms for many purposes. It includes things like numbers, text, images, sound, and objects.

What is AI?

AI is using computers to create technology that can understand, explain, and create things. Sometimes that means AI thinks like a human (Stryker & Kavlakoglu, 2024).

What is an algorithm?

An algorithm is a set of rules or steps to finish a task (National Library of Medicine, 2022). Algorithms are the building blocks of AI and computing. We can train AI to develop its own rules based on patterns in data.

An example of data, AI and an algorithm in action

Without even realising it, you’re likely already seeing this play out in day-to-day life.

For example, if you have a supermarket loyalty card, each time you shop and scan it, a computer takes that data and uses algorithms to understand your shopping habits. This then triggers an output: a discount voucher for a product you regularly purchase, or one you haven’t purchased in a while.
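To make the loyalty card example concrete, here is a minimal sketch of what such an algorithm could look like in Python. It is only an illustration: the shopping data, the function name `choose_voucher`, and the thresholds `regular_count` and `lapsed_weeks` are all made up for this example, and real supermarket systems are far more complex.

```python
from collections import Counter

# Hypothetical shopping history for one loyalty card: (week number, product).
purchases = [
    (1, "coffee"), (1, "milk"),
    (2, "coffee"), (2, "milk"),
    (3, "milk"), (3, "bread"),
    (4, "milk"),
    (5, "milk"),
]


def choose_voucher(purchases, current_week, regular_count=2, lapsed_weeks=3):
    """Pick one product to offer a discount on, or None if there is no data.

    Rule 1: if a product was bought regularly but not recently,
            offer it to tempt the shopper back.
    Rule 2: otherwise, offer the product bought most often.
    """
    if not purchases:
        return None

    # Data in: how often each product was bought, and when it was last bought.
    counts = Counter(product for _, product in purchases)
    last_bought = {}
    for week, product in purchases:
        last_bought[product] = max(week, last_bought.get(product, week))

    # Rule 1: regular purchases that have lapsed.
    lapsed = [
        product
        for product, count in counts.items()
        if count >= regular_count
        and current_week - last_bought[product] >= lapsed_weeks
    ]
    if lapsed:
        return max(lapsed, key=lambda product: counts[product])

    # Rule 2: the most frequently bought product.
    return counts.most_common(1)[0][0]


print(choose_voucher(purchases, current_week=5))  # prints "coffee"
```

Here the data is the list of purchases, the algorithm is the two rules inside `choose_voucher`, and the output is the product chosen for a voucher. In this sketch the rules are written by hand; an AI system would instead learn rules like these from patterns in many shoppers' data.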

Don’t forget, if you use Alexa® or Siri®, you have already integrated AI into your daily routine.

How we plan to take it further

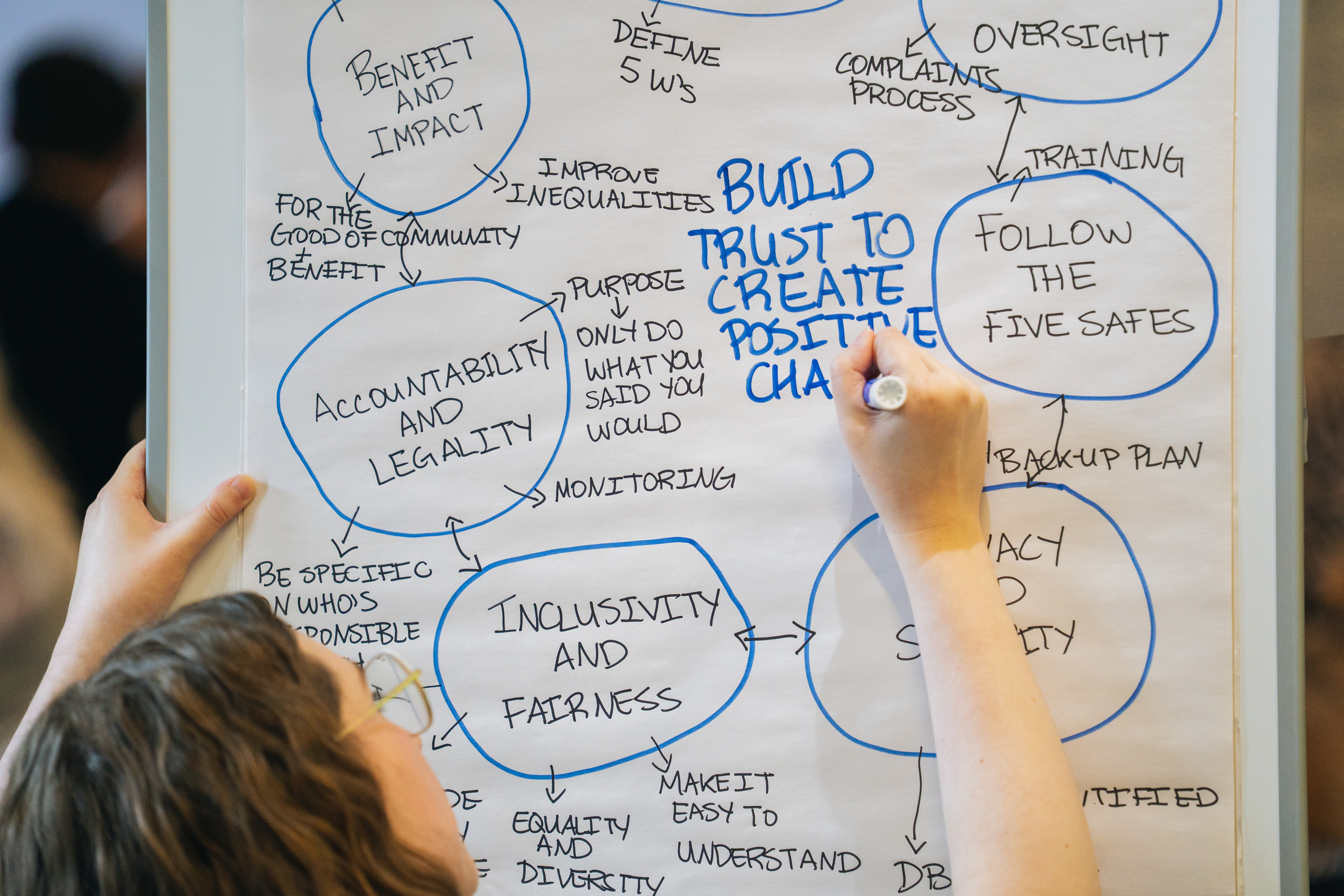

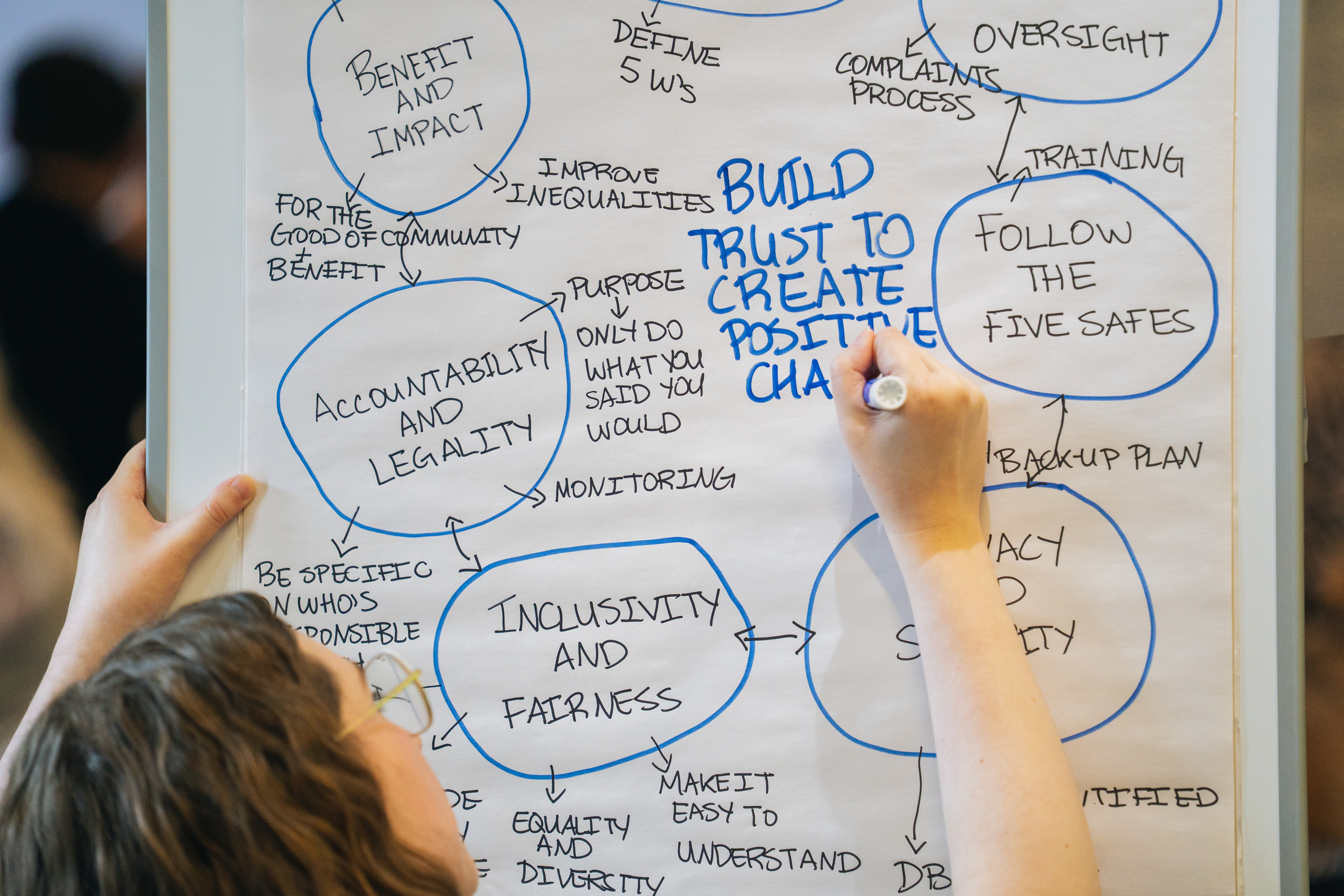

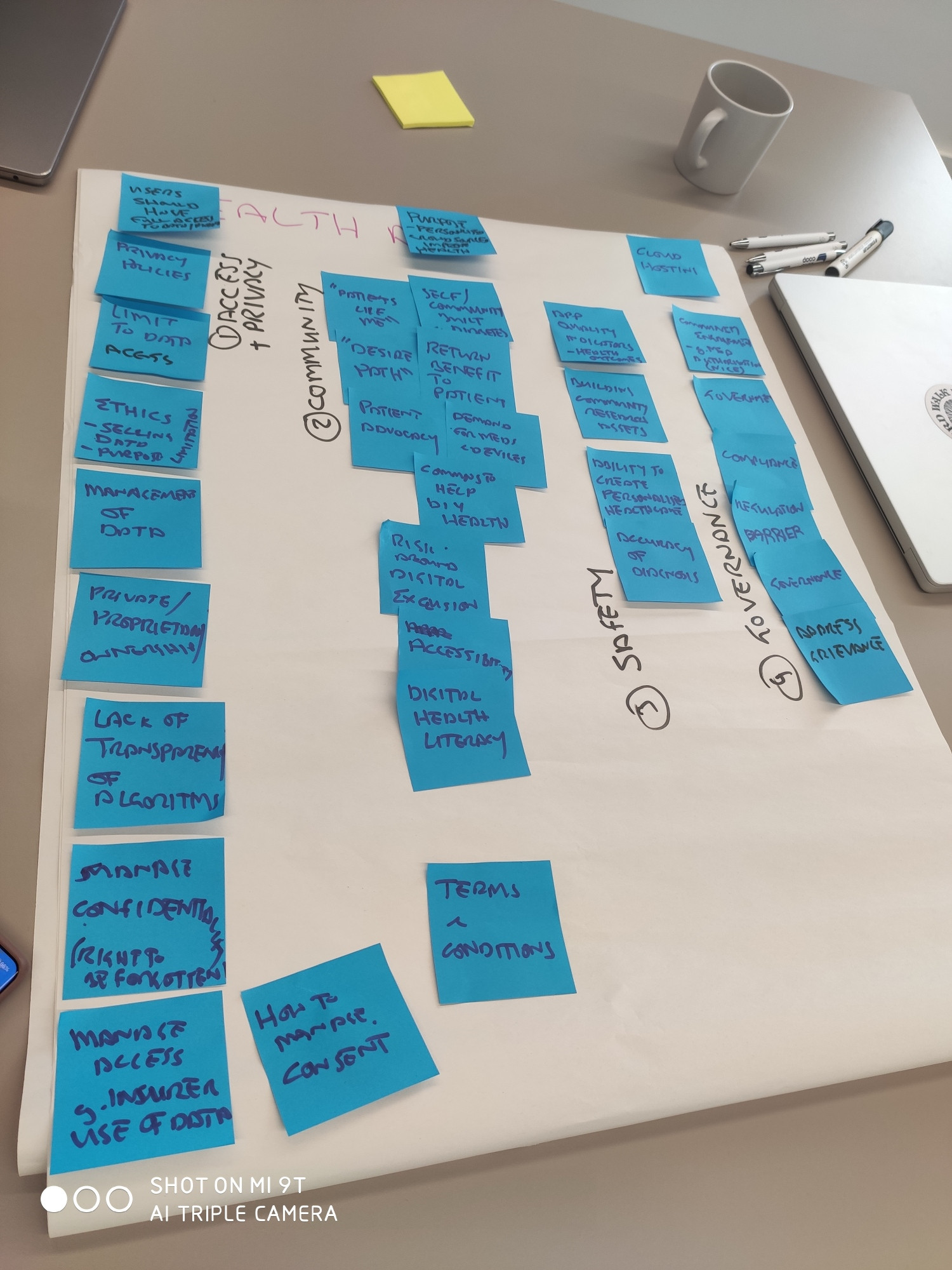

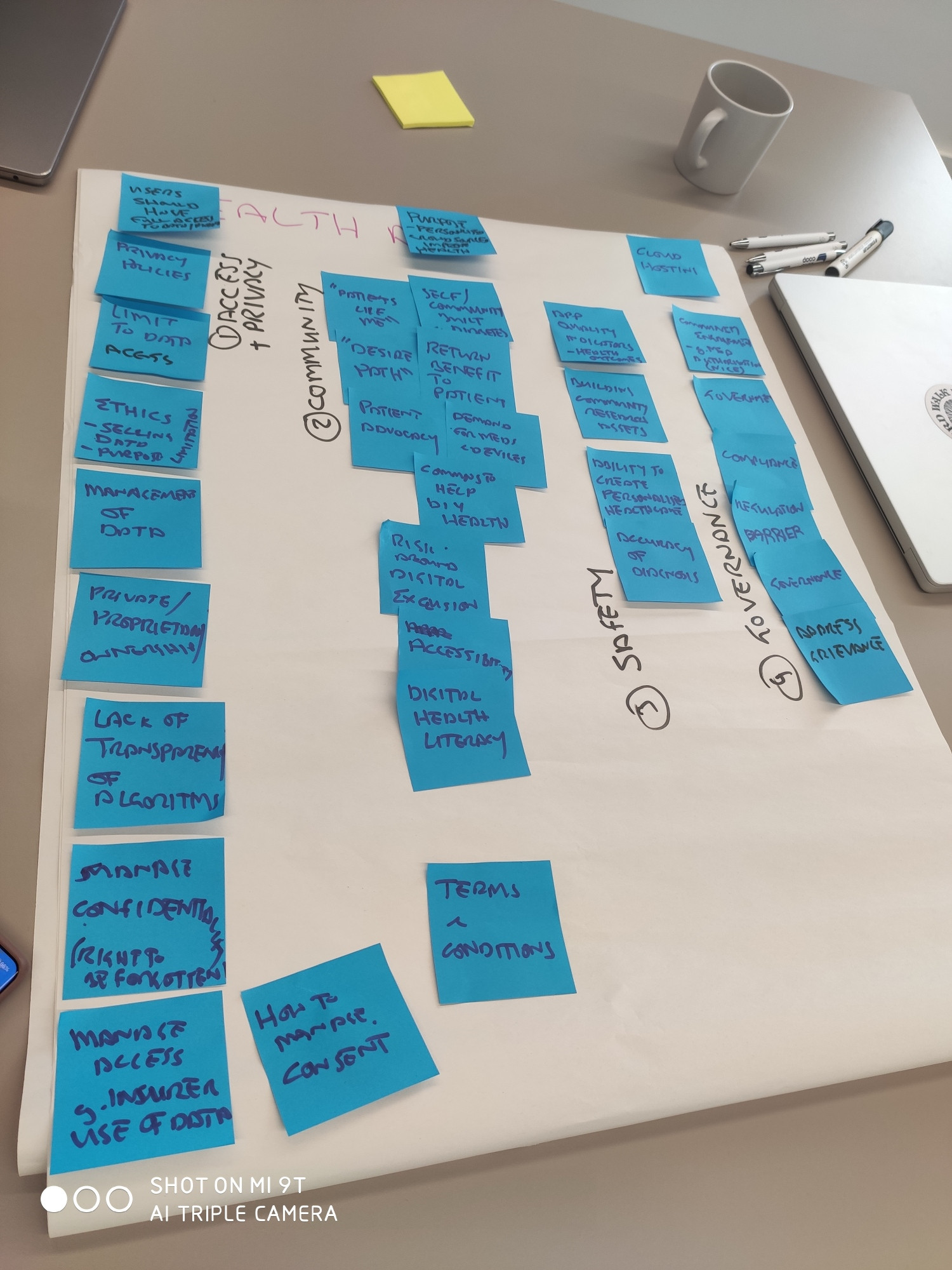

In March 2025, 59 residents of the Liverpool City Region came together to help us build a set of principles on what beneficial and trustworthy data and AI innovation looks like.

These 11 principles now set out what each organisation in our charter must adhere to, so that residents can continue sharing their data and it can be used for good.

Examples of good include

- Using smart readers to track dementia patients’ energy use to improve their wellbeing and independence

- Tracking various types of data to prompt earlier intervention for children and families who need support

- Linking testing results with personal data to help prevent future outbreaks of disease

Our 11 principles

Principle #1 Beneficial:

Use data and AI for the good and benefit of the community and the Liverpool City Region (LCR). Where possible, benefits should reach wider society as well.

People and organisations should prioritise public benefit in all data and AI projects. Public good can mean a lot of things, from operational efficiencies to saving lives. Residents especially want to see data and AI used in improving existing services. While projects should focus on the City Region first, residents would like to see other areas benefit from the research and projects developed here.

Principle #2 Security:

Ensure that the Five Safes (Safe Data, Projects, People, Settings, and Outputs) and the UK General Data Protection Regulation are being adhered to.

It is expected that personal data about residents is kept safe. Projects and organisations should keep personal data secure when stored, transmitted, and processed. The Five Safes should be core principles for personal and identifiable data safety and security. All organisations must already follow the UK General Data Protection Regulation for information security.

Principle #3 Accountability:

Ensure accountability at all levels, including a declaration of responsibility for each data and AI project.

Design in accountability from the start. It should be clear who should take responsibility when something goes wrong in data and AI development and decision-making. That means defining who is responsible for:

- addressing bias in datasets and algorithms

- correcting and updating algorithms

- monitoring algorithms that are in use

- training front-line staff on algorithm use, benefits, and limits

Make it clear to service users who should be contacted if someone wants to challenge a decision made by an algorithm.

Principle #4 Transparency:

Inspire trust between organisations and residents by being honest in how data is collected, used, and implemented in projects.

Residents want to be able to know what is happening with their data at scale. Transparency is a key building block to trustworthy practice. It is expected that data and AI projects, and how they use data, are reported in a publicly accessible register. Descriptions of projects must be written in plain English and understandable to residents.

Principle #5 Inclusivity:

Promote fairness, universal access, and equity in the development of data and AI innovation. Ensure diverse and affected communities are involved and heard throughout the life of the project.

Residents want everyone to benefit from data and AI projects. State clearly who data and AI projects are intended to benefit. When relevant, consult and engage that community throughout projects. Ensure data and AI projects do not reinforce existing inequities.

Principle #6 Privacy:

Protect the dignity, identities, and privacy of LCR residents.

Privacy, in this case, is about residents’ ability to live a life free of the negative impacts of data and AI. There should always be an option to opt out of data sharing and AI use. This also includes keeping sensitive information private. Make sure that data reidentification does not harm communities. Data and AI usage in the LCR should not make community hardships and inequities worse.

Principle #7 Legality:

Keep up to date on legislation and policy changes on data and AI. Always abide by and adhere to the rule of the law.

Data and AI law is constantly developing in the UK. Under existing law, data breaches and misuse must be reported within organisations. They must then be escalated to the Information Commissioner’s Office where appropriate. All organisations are expected to meet the existing legal requirements for secure and safe data sharing. Examples include UK GDPR, the Common Law Duty of Confidentiality for health, as well as broader legislation like the Equality Act 2010.

Principle #8 Trustworthy:

Communicate on outcomes through a public register of data and AI projects. Flag projects which use AI to raise awareness.

It is expected that organisations and projects report back on the outcomes of their data and AI use in a publicly accessible register. This is a key way to demonstrate trustworthy practice as it communicates alignment to the other principles. For residents to trust organisational use of data and AI it must be understandable. That means flagging deployed projects which use AI.

Principle #9 Governance:

Ensure that actions have consequences. Projects and people must recognise that innovation with data and AI has risks. Harms and risks must be appropriately managed throughout the lifecycle of a project.

Project descriptions should include sharing information on risks and mitigations. Projects could potentially lose the stamp of the Charter if they do not report on the risks and mitigations of their data use. The three original signing bodies are responsible for publicly reporting on these data and AI projects including risks in the aforementioned public register. They should create processes for overseeing this requirement.

Principle #10 Balanced Innovation:

Use data and AI to drive innovation in the LCR, but counteract negative impacts on the environment and the wellbeing of future generations. Where data and AI negatively impact employment, make sure this is offset by upskilling and benefits to the community. Bureaucracy should not stifle community benefit.

Residents want the LCR to lead the way in community-led data innovation. Data silos and bureaucratic process should not prevent this progress. However, innovation should not cause harm. While AI may mean job losses, every effort should be made to retrain and upskill the local workforce. Residents want to see their community and environment protected. Environmental sustainability should be addressed in digital and physical data infrastructure.

Principle #11 Oversight:

An independent review takes place at minimum every two years on partners’ use of the Charter. Changes to the Charter are informed by this review.

Data and AI technologies are constantly evolving. It is expected that the Charter evolves with them as a living document. A review of the Charter and signing organisations’ use of data and AI is expected at least every two years. Organisations and projects will be kitemarked to the 11 principles. This review must be independent of signing organisations and inclusive of residents to ensure external oversight.

Feel informed and empowered when it comes to data: read our charter

Principles of ethical data sharing are nothing new, but this is a first-of-its-kind charter because it is based on public engagement. It also comes with a promise to create space for new research to take place continually, so that updates keep reflecting what’s going on in the world around us.

As a resident of the LCR, your data will play a part in benefiting not only your local area and the region as a whole, but also wider society.

From better local health and education services, to combatting the potholes and fly-tipping that appear right on your doorstep, right through to keeping on top of health outbreaks, your data can be used safely and securely to help with all of these.

To find out more about the work we’re doing and what we’re asking from our organisational partners, you can read our charter.

Feel informed and empowered: read our charter

Podcasts more your thing? We also have a series of episodes exploring how artificial intelligence is shaping our lives. Hear from experts and ordinary people who live or work in Liverpool.

Listen here